DarkFi - Anonymous, Uncensored, Sovereign

We aim to proliferate anonymous digital markets by means of strong cryptography and peer-to-peer networks. We are establishing an online zone of freedom that is resistant to the surveillance state.

Unfortunately, the law hasn’t kept pace with technology, and this disconnect has created a significant public safety problem. We call it "Going Dark".

James Comey, FBI director

So let there be dark.

About DarkFi

DarkFi is a new Layer 1 blockchain, designed with anonymity at the forefront. It offers flexible private primitives that can be wielded to create any kind of application. DarkFi aims to make anonymous engineering highly accessible to developers.

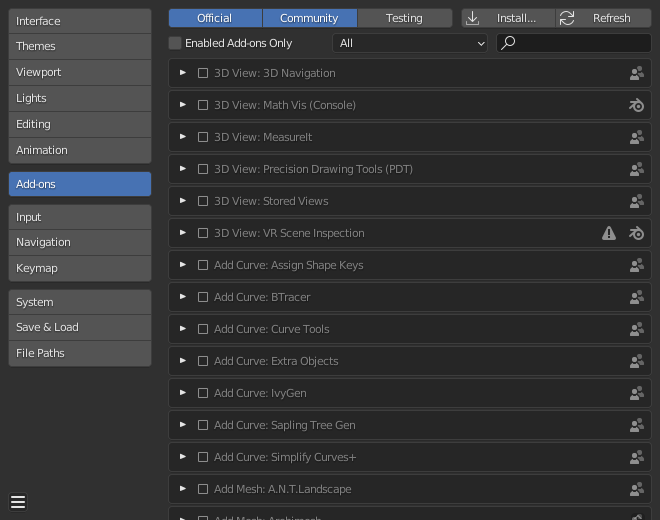

DarkFi uses advances in zero-knowledge cryptography and includes a contracting language and developer toolkits to create uncensorable code.

In the open air of a fully dark, anonymous system, cryptocurrency has the potential to birth new technological concepts centered around sovereignty. This can be a creative, regenerative space - the dawn of a Dark Renaissance.

Connect to DarkFi Alpha Testnet

DarkFi Alpha Testnet is a PoW blockchain that provides fully anonymous transactions, zero-knowledge contracts, anonymous atomic swaps, a self-governing anonymous DAO, and more.

- darkfid is the DarkFi fullnode. It validates blockchain transactions and stays connected to the p2p network.

- drk is a CLI wallet. It provides an interface to smart contracts such as Money and DAO, manages our keys and coins, and scans the blockchain to update our balances.

- minerd is the DarkFi mining daemon. It connects to darkfid over RPC and triggers commands for it to mine blocks.

To connect to the alpha testnet, follow the tutorial.

Connect to DarkFi IRC

Follow the installation instructions for the P2P IRC daemon.

Build

First you need to clone the DarkFi repo and enter its root folder, if you haven't already done so:

% git clone https://codeberg.org/darkrenaissance/darkfi

% cd darkfi

% git checkout v0.5.0

This project requires the Rust compiler to be installed. Please visit Rustup for instructions.

You have to install a native toolchain, which is set up during Rust installation, and the wasm32 target. To install the wasm32 target, execute:

% rustup target add wasm32-unknown-unknown

Minimum Rust version supported is 1.87.0.

The following dependencies are also required:

| Dependency | Debian-based |
|---|---|
| git | git |
| cmake | cmake |
| make | make |
| gcc | gcc |
| g++ | g++ |
| pkg-config | pkg-config |
| alsa-lib | libasound2-dev |
| clang | libclang-dev |
| fontconfig | libfontconfig1-dev |
| lzma | liblzma-dev |
| openssl | libssl-dev |
| sqlcipher | libsqlcipher-dev |
| sqlite3 | libsqlite3-dev |

Users of Debian-based systems (e.g. Ubuntu) can simply run the following to install the required dependencies:

# apt-get update

# apt-get install -y git cmake make gcc g++ pkg-config libasound2-dev libclang-dev libfontconfig1-dev liblzma-dev libssl-dev libsqlcipher-dev libsqlite3-dev

Alternatively, users can try the automated script under the contrib folder by executing:

% sh contrib/dependency_setup.sh

The script will try to recognize which system you are running, and install dependencies accordingly. In case it does not find your package manager, please consider adding support for it into the script and sending a patch.

Lastly, we can build the project's binaries using the provided Makefile. If you only want to build specific ones, like darkfid or darkirc, skip this step, as it will build everything, and use their specific targets instead.

% make

Development

If you want to hack on the source code, make sure to read some introductory advice in the DarkFi book.

Installation (Optional)

This will install the binaries on your system (/usr/local by default). The configuration files for the binaries are bundled with the binaries and contain sane defaults. You'll have to run each daemon once in order for them to spawn a config file, which you can then review.

# make install

Examples and usage

See the DarkFi book

Go Dark

Let's liberate people from the claws of big tech and create the democratic paradigm of technology.

Self-defense is integral to any organism's survival and growth.

Power to the minuteman.

Start Here

Directory Structure

DarkFi loosely follows the standardized Unix directory structure.

- All bundled applications are contained in the bin/ subdirectory.

- Random scripts and helpers such as build artifacts, node deployment or syntax highlighting are in contrib/.

- Documentation is in doc/.

- Example code is in example/.

- Script utilities are in script/. See also the large script/research/ subdir.

- All core library code is contained in src/. See Architecture Overview for a detailed description.

  - The src/sdk/ crate is used by WASM contracts and core code. It contains essential primitives and code shared between them.

  - src/serial/ is a crate containing the binary serialization code.

  - src/contract/ contains our native bundled contracts. It's worth looking at these to familiarize yourself with what contracts on DarkFi are like.

Using DarkFi

Refer to the main README file for instructions on how to install Rust and necessary dependencies.

Then proceed to the Running a Node guide.

Join the Community

Although we have a Telegram, we don't believe in centralized proprietary apps, and our core community organizes through our own fully anonymous p2p chat system which has support for Tor and i2p.

Every Monday at 14:00 UTC (DST) or 15:00 UTC (ST) in #dev we have our main project meeting.

See the guide on darkirc for instructions on joining the chat.

Contributing as a Dev

Check out the contributor's guide for where to find tasks and how to submit patches to the project.

If you're not a dev but wish to learn, take a look at the agorism hackers study guide.

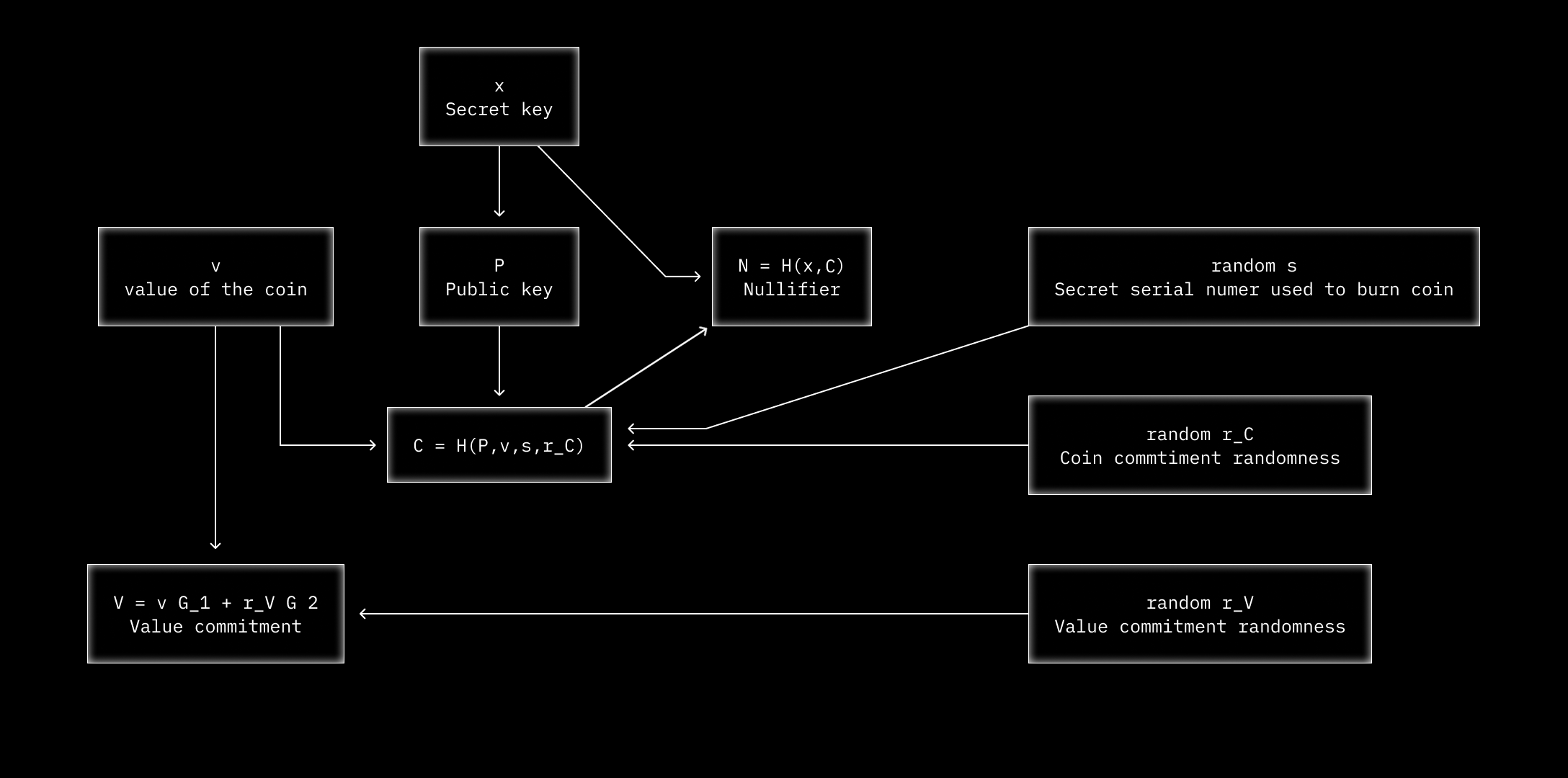

Lastly, familiarize yourself with the project architecture. The book also contains a cryptography section with a helpful ZK explainer.

DarkFi also has a project spec and a DEP (DarkFi Enhancement Proposals) system.

Detailed Overview

Source code is under the src/ subdirectory. The main modules of interest are:

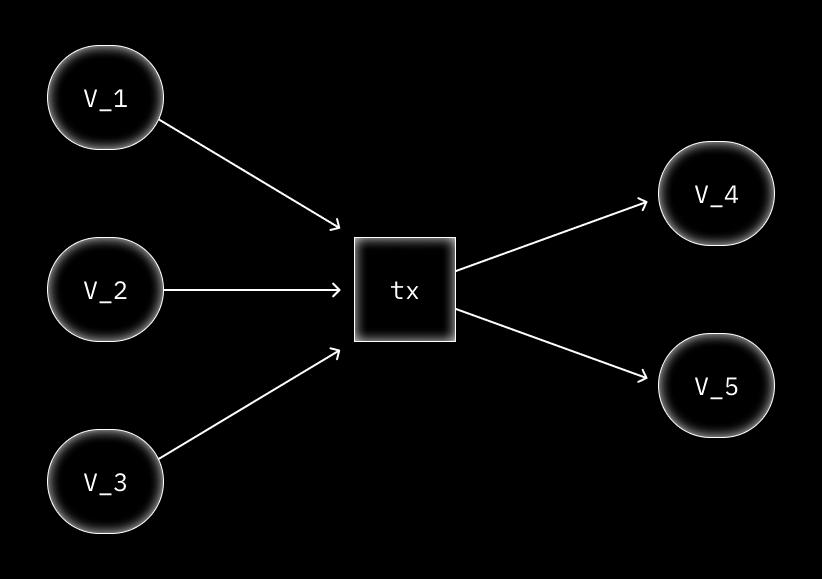

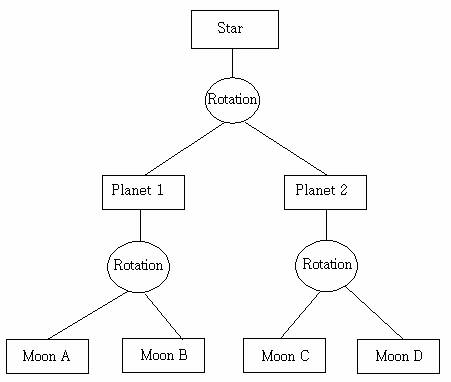

- net/ is our own p2p network. There are sessions such as incoming or outgoing that have channels (connections). Protocols are attached to channels depending on the session. The p2p network is also multi-transport with support for TCP (+TLS), Tor and i2p, so you can access the p2p network fully anonymously (network level privacy).

- event_graph/ is a DAG sync protocol used for ensuring eventual consistency of data, such as with chat systems (you don't drop any messages).

- runtime/ is the WASM smart contract engine. We separate computation into several stages, which is the checks-effects-interactions paradigm from Solidity, but enforced explicitly in the smart contract. For example, in the exec() phase you can only read, whereas writes must occur in the apply(update) phase.

- blockchain/ and validator/ are the blockchain and consensus algos.

- zk/ is the ZK VM, which simply loads bytecode that is used to build the circuits. It's a very simple model rather than the TinyRAM computation models. We opted for this because we prefer simplicity in systems design.

- sdk/ contains a crypto SDK usable in smart contracts and applications. There are also Python bindings here, useful for making utilities or small apps.

- serial/ contains our own serialization because we don't trust Rust serialization libs like serde. We also have async serialization and deserialization, which is good for network code.

- tx/ is the tx we use. Note that signatures are not in the calldata, as keeping them outside it allows more efficient verification (since you can do it in parallel and so on).

  - All DarkFi calls are precomputed ahead of time, which is needed for ZK. Normally in ETH or other smart contract chains, the calldata is calculated where the function is invoked, whereas in DarkFi the entire callgraph and calldata is bundled since ZK proofs must be computed ahead of time. This also improves things like static analysis and security (limiting call depth is easy to check before verification).

  - Verifying sigs or call depth ahead of time helps make the chain more attack resistant.

- contract/ contains our native smart contracts. Namely:

  - money, which is multi-asset anonymous transfers, anonymous swaps and token issuance. The token issuance is programmatic. When creating a token, it commits to a smart contract which specifies how the token is allowed to be issued.

  - deploy, for deploying smart contracts.

  - dao, which is a fully anonymous DAO. All the DAOs on chain are anonymous, including the amounts and activity of the treasury. All participants are anonymous, proposals are anonymous and votes are anonymous, including the token weighted vote amount and user identity. You cannot see who is in the DAO.
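The read-only exec() phase and the write-only apply(update) phase described for runtime/ above can be pictured with a minimal sketch. This is not DarkFi's SDK: the State and Update types, the function signatures and the transparent balance map are all hypothetical and chosen only to show the idea that the first phase receives an immutable view of state and returns an update, while the second phase is the only place allowed to mutate state.

```rust
use std::collections::HashMap;

/// Hypothetical contract state (illustration only): name -> balance.
#[derive(Debug, Default)]
struct State {
    balances: HashMap<String, u64>,
}

/// Hypothetical update produced by the read-only phase.
#[derive(Debug, PartialEq)]
struct Update {
    from: String,
    to: String,
    amount: u64,
}

/// Read-only phase: it takes `&State`, so the compiler rejects any write.
/// All checks happen here and the result is a description of the change.
fn exec(state: &State, from: &str, to: &str, amount: u64) -> Result<Update, String> {
    let balance = state.balances.get(from).copied().unwrap_or(0);
    if balance < amount {
        return Err(format!("insufficient balance: {balance} < {amount}"));
    }
    Ok(Update { from: from.to_string(), to: to.to_string(), amount })
}

/// Write phase: the only function that takes `&mut State`.
/// It assumes `update` came from a successful `exec` on the same state.
fn apply(state: &mut State, update: Update) {
    *state.balances.entry(update.from).or_insert(0) -= update.amount;
    *state.balances.entry(update.to).or_insert(0) += update.amount;
}

fn main() {
    let mut state = State::default();
    state.balances.insert("alice".to_string(), 10);

    // Checks first (read-only), effects second (write).
    let update = exec(&state, "alice", "bob", 4).expect("checks pass");
    apply(&mut state, update);
    println!("{:?}", state.balances);

    // A failing check never reaches the write phase.
    assert!(exec(&state, "alice", "bob", 100).is_err());
}
```

Splitting the phases by borrow type means a contract author cannot accidentally write during validation; in this sketch the Rust borrow checker plays the role that the runtime plays in the description above.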

NOTE: We try to minimize external dependencies in our code as much as possible. We even try to limit dependencies within submodules.
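Returning to the tx/ notes above: because the whole call graph is bundled with the transaction, a limit on call depth can be checked before any contract code or proof verification runs. The sketch below shows that idea under stated assumptions. The Call type, the helper names and the limit values are hypothetical and do not reflect DarkFi's actual transaction format.

```rust
/// Hypothetical node in a precomputed call tree: one contract call and the
/// child calls it triggers. Not DarkFi's real transaction type.
#[allow(dead_code)] // `calldata` is carried along but not inspected here
struct Call {
    calldata: Vec<u8>,
    children: Vec<Call>,
}

/// Length of the deepest call chain; a call with no children has depth 1.
fn call_depth(call: &Call) -> usize {
    1 + call.children.iter().map(call_depth).max().unwrap_or(0)
}

/// Reject a call tree that nests deeper than `max_depth`.
/// This only walks the tree, so it is cheap compared to verification.
fn check_call_depth(root: &Call, max_depth: usize) -> Result<(), String> {
    let depth = call_depth(root);
    if depth > max_depth {
        return Err(format!("call depth {depth} exceeds limit {max_depth}"));
    }
    Ok(())
}

/// Convenience constructor for a call without children.
fn leaf(calldata: &[u8]) -> Call {
    Call { calldata: calldata.to_vec(), children: vec![] }
}

fn main() {
    // root -> [leaf, inner -> [leaf]]
    let inner = Call { calldata: vec![3], children: vec![leaf(&[4])] };
    let root = Call { calldata: vec![1], children: vec![leaf(&[2]), inner] };

    println!("depth = {}", call_depth(&root));
    println!("limit 3: {:?}", check_call_depth(&root, 3));
    println!("limit 2: {:?}", check_call_depth(&root, 2));
}
```

On a chain where calldata is computed at invocation time, the same check would require executing the calls first, which is the contrast the tx/ notes draw.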

bin/ contains utilities and applications:

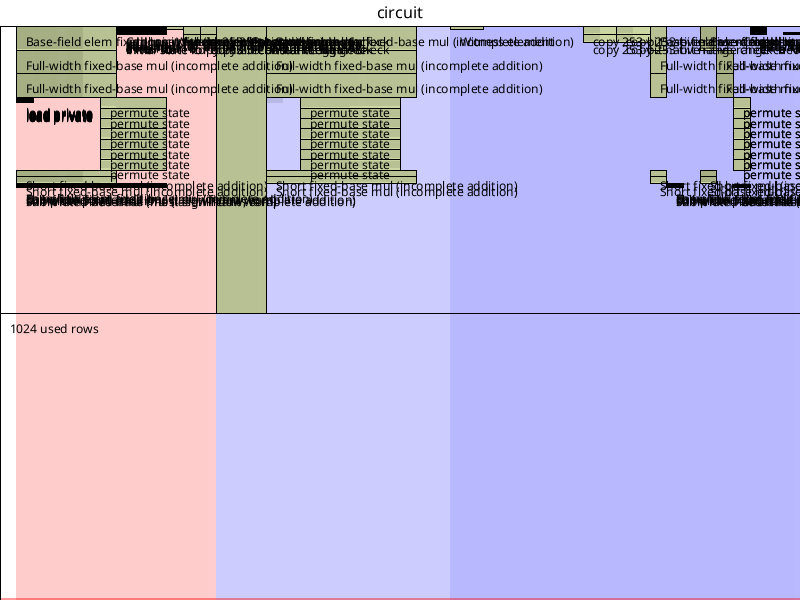

- darkfid/ is the main daemon and drk/ is the wallet.

- dnet/ is a viewer to see the p2p traffic of nodes, and deg/ is a viewer for the event graph data. We use these as debugging and monitoring tools.

- dhtd/ is a distributed hash table, like IPFS, for transferring static data and large files around. Currently just a prototype but we'll use this later for images in the chat or other static content like seller pages on the marketplace.

- tau/ is an anon p2p task manager which we use. We don't use Github issues, and seek to minimize our dependence on centralized services. Eventually we want to be fully p2p and attack resistant.

- darkirc/ is our main community chat. It uses RLN; you stake money and if you post twice in an epoch then you get slashed which prevents spam. There is a free tier. It uses the event_graph for synchronizing the history. You can attach any IRC frontend to use it.

- zkas/ is our ZK compiler. zkrunner/ contains our ZK debugger (run zkrunner with --trace), and zkrender which renders a graphic of the circuit layout.

- lilith/ is a universal seed node. Eventually we will add swarming support to our p2p network which is an easy addon.
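The "post twice in an epoch and get slashed" rule mentioned for darkirc/ can be illustrated with the secret-sharing idea that RLN (Rate-Limiting Nullifier) schemes are generally built on. Each message reveals one point on a line whose intercept is the sender's secret key; two points from the same epoch are enough to recover that key. The sketch below is a toy under stated assumptions: the 61-bit field, the toy_hash function and all names are hypothetical, there are no ZK proofs, and nothing here is taken from darkirc's implementation.

```rust
/// Toy prime modulus (2^61 - 1). Real RLN uses a much larger field.
const P: u128 = (1 << 61) - 1;

fn add(a: u128, b: u128) -> u128 { (a + b) % P }
fn sub(a: u128, b: u128) -> u128 { (a + P - b) % P }
fn mul(a: u128, b: u128) -> u128 { (a * b) % P }

/// Modular inverse via Fermat's little theorem: a^(P-2) mod P.
fn inv(a: u128) -> u128 {
    let (mut base, mut exp, mut acc) = (a % P, P - 2, 1u128);
    while exp > 0 {
        if exp & 1 == 1 {
            acc = mul(acc, base);
        }
        base = mul(base, base);
        exp >>= 1;
    }
    acc
}

/// Toy mixing function standing in for a real hash. NOT secure.
fn toy_hash(a: u128, b: u128) -> u128 {
    let c1 = 6364136223846793005 % P;
    let c2 = 1442695040888963407;
    add(add(mul(a % P, c1), mul(b % P, c2)), 1)
}

/// One share per message: the point (x, y) on the line
/// y = secret + slope * x, where the slope is fixed per (secret, epoch).
fn share(secret: u128, epoch: u128, message: u128) -> (u128, u128) {
    let slope = toy_hash(secret, epoch);
    let x = toy_hash(message, 0);
    (x, add(secret % P, mul(slope, x)))
}

/// Two distinct points on the same line reveal its intercept (the secret).
fn recover(s1: (u128, u128), s2: (u128, u128)) -> Option<u128> {
    if s1.0 == s2.0 {
        return None; // same x: only one point, nothing to interpolate
    }
    let slope = mul(sub(s2.1, s1.1), inv(sub(s2.0, s1.0)));
    Some(sub(s1.1, mul(slope, s1.0)))
}

fn main() {
    let secret = 123_456_789;
    let first = share(secret, 7, 1);
    let second = share(secret, 7, 2); // second post in the same epoch

    // Anyone holding both shares can now compute the key and slash the stake.
    assert_eq!(recover(first, second), Some(secret));
    // A single post (or a repeat of the same one) reveals nothing.
    assert_eq!(recover(first, first), None);
    println!("recovered secret: {:?}", recover(first, second));
}
```

A single post per epoch gives observers one point, which is consistent with every possible intercept, so honest users keep their key private.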

Lastly, it is worth taking a look at script/research/ and script/research/zk/, which contain impls of most major ZK algos. bench/ contains benchmarks. script/escrow.sage is a utility for doing escrow. We'll integrate it in the wallet later.

Our design philosophy and simplicity-oriented approach to systems dev:

- Suckless Philosophy: software that sucks less

- How to Design Perfect (Software) Products by Pieter Hintjens

Recent crypto code audit: ZK Security DarkFi Code Audit

Useful link on our ZK toolchain

For proof files, see proof/ and src/contract/*/proof/ subdirs.

DarkFi Philosophy

State Civilization and the Democratic Nation

State civilization has a 5000 year history. The origin of civilizations in Mesopotamia experienced a Cambrian explosion of various forms. The legacy of state civilization can be traced back to ancient Assyria, which was essentially a military dictatorship that mobilized all of society's resources to wage war and defeat other civilizations, enslaving them and seizing their wealth.

Wikipedia defines civilization:

Civilizations are organized densely-populated settlements divided into hierarchical social classes with a ruling elite and subordinate urban and rural populations.

Civilization concentrates power, extending human control over the rest of nature, including over other human beings.

However this destiny of civilization was not inherent as history teaches us. This definition is one particular mode of civilization that became prevalent. During human history there has been a plethora of forms of civilizations. Our role as revolutionaries is to reconstruct the civilizational paradigm.

The democratic nation is synonymous with society, and produces all value including that which the state extracts. Creative enterprise and wealth production originate in society from small scale business, artisans and inventors, and anybody driven by intent or ambition to create works.

Within society, there are multiple coexisting nations which are communities of people sharing language, history, ethnicity or culture. For example there could be a nation based on spiritual belief, a nation of women, or a distinct cultural nation.

The nation state is an extreme variant of the state civilization tendency. Like early state civilizations, the development of the French nation-state was more effective at seizing the wealth of society to mobilize in war against the existing empires of the time.

Soon after, the remaining systems were forced to adopt the nation state system, including its ideology of nationalism. It is no mistake that the nation state tends towards nationalism and fascism including the worst genocides in human history. Nationalism is a blind religion supplanting religious ideologies weakened by secularism. However nationalism is separate from patriotism.

Loving one's country for which you have struggled and fought over for centuries or even millennia as an ethnic community or nation, which you have made your homeland in this long struggle, represents a sacred value.

~ Ocalan's "PKK and Kurdish Question in the 21st century"

Defining the State

The state is a centralized organization that imposes and enforces rules over a population through the monopoly on the legitimate use of violence. In discussing the rise of militarism, Ocalan says:

Essentially, he attempted to establish his authority over two key groups: the hunters at his side and the women he was trying to confine to the home. Along the way, as shamans (proto-priests) and gerontocratic elements (groups of elders) joined the crafty strongman, the first hierarchical authority was formed in many societies in various forms.

Section called "Society’s Militarism Problem" from "The Sociology of Freedom"

The state is defined as:

- Ideological hegemony represented by a system of priests and shamans in pre-state formations. Today this is the media industry, schools, and big tech (for example "fake news" and surveillance systems).

- Monopolization of the use of violence through military and police. Powerful commanders like Gilgamesh conquered neighbouring tribes, creating nascent state polities whose main business model was to hunt and enslave barbarian tribes. This enabled the state to expand agricultural output, which it seized through taxation.

- State bureaucracy and administration represented in tribal societies and proto-states through a council of elders. This arm of the state also includes scientific research institutes, state psychology groups and various forms of manipulation including modern AI and data harvesting. This tendency is a misappropriation of scientific methods to serve power, thus retarding scientific and technological development and impoverishing society by depriving it of its great benefits.

The state is a parasite on society, extracting the value that society creates. There are many historical stateless societies such as the Medean Confederation, the Iroquois Confederation, the ninja republics of Iga and Kōga, the Swiss pre-Sonderbund Confederation Helvetica, and Cossack society.

Government is a system or group of people administering a society. Although most governments are nation-states, we can create stateless societies with autonomous governments. Within a free society government is local, broadly democratic, and autonomous. Society is widely engaged at every level in decision making through local people's councils, as well as possessing the use of violence through a system of gun ownership and local militias. Government also answers to a network of various interest groups from various nations such as women or youth.

Modernity and the Subject of Ideology

Modernity was born in the "age of reason" and represents the overturning of prevailing religious ideas and rejection of tradition in favour of secularization. Modernity has a mixed legacy which includes alienation, commodity fetishism, scientific positivism, and rationalism.

During this period of modernity, four major ideologies developed, each with its own specific subject as its focus.

- Liberalism and its subject of the individual. Individuals are atomic units existing under the state system which guarantees them certain bargains in the form of laws and rights.

- Communism which focused on the concept of class warfare and economic justice through state power.

- Fascism which put the volk or state at the center of society, whereby all resources would be mobilized to serve the state.

- Anarchism with its critiques of power and favouring social freedom.

The particular form of modernity that is predominant can be characterized as capitalist modernity. Capitalism, referred to by others as corporatism, can be likened to a religion, whereby a particular elite class having no ideology except self profit uses the means of the state to protect its own interests and extract the wealth of society through nefarious means. In some ways, it is a parasite on the state.

Agorist free markets are the democratic tendency of economy. The word 'economy' derives from the Ancient Greek 'oikonomos' which means household management. Economy was, during ancient periods, connected with nature, the role of motherhood, life, and freedom. It was believed that wealth was derived from the quality of our lived environments. The Kurds use the feminine word 'mal' to refer to the house, while the masculine variant refers to actual property. Later during the Roman period, economy came to be understood as the accumulation of property in the form of number of slaves owned, amount of land seized, or the quantity of money.

The subject of our ideology is the moral and political society. Society exists within a framework of morality and politics. Here we use politics to refer not to red team vs blue team, but instead all social activity concerned with our security, necessity, and life. A society can only be free when it has its own morality and politics. The state seeks to keep society weak by depriving it of morality and replacing society's politics with statecraft.

The Extinction of Humanity

During the 20th century, liberals, communists, and fascists vied for state power, each promising a new form of society. State power existed with these external ideological justifications.

With the end of the Soviet Union, and the end of history (according to liberalism), state power morphed into pure domination and profit. The system simply became a managerial form of raw power over society without purpose or aim. Wars such as the Iraq War were invented by neoliberals to breathe a new purpose into society. Indeed, there is no more effective means to support despotism than war.

Today the military industrial complex has grown into a gigantic leviathan that threatens the world, driving economies into an ever greater spiral of desperation. The push for the development of automated weapons, aerial drones, and ninja missiles eliminates all space for human resistance against tyranny.

Meanwhile social credit scoring is being introduced as CBDCs with incentivization schemes such as UBI. Such systems will give more effective means for the seizure of wealth by the state from society, centralizing economic power within an already deeply corrupt elite class.

Liberal ideologies have made people indifferent to their own situation, turning society inward and focused on social media, unable to organize together to oppose rising authoritarianism. Liberalism is essentially a tendency towards extinction or death.

Nature, Life, Freedom

Nature is the center of spiritual belief. Humanity is an aspect of nature. Nature is more than the number of trees. It is the going up, the ascending of life. Through struggle, we overcome obstacles, becoming harder and stronger along the way. This growth makes us more human and closer with nature.

People naturally feel an empathy with nature such as when an animal is injured or generous feelings towards the young of any species. This feeling was put in us by evolution. We feel an attachment and empathy towards our lived environment and want to see it improve. This feeling is mother nature speaking through us. The more in touch we are with this deeper feeling, the more free we are since we are able to develop as higher human beings.

Freedom does not mean the ability to be without constraint or enact any wild fantasy at a moment's notice. Freedom means direct conscious action with power that drives us to ascend. Nature is full of interwoven threads of organisms vying for influence or power, with time doing an ordered dance punctuated by inflection points of change. It is during these moments that our ability of foresight and prescience allows us to deeply affect events along alternative trajectories.

Re-Evaluating Anarchism

Anarchists had the correct critique and analysis of power. In particular, they saw that the nation state would grow into a monster that would consume society. However, they were the least equipped of all the ideologies during modernity to enact their vision and put their ideas into practice.

- They fell victim to the same positivist forces that they claimed to be fighting against.

- They lacked a coherent vision and had little strategy or roadmap for how the revolution would happen.

- Their utopian demand that the state must be eliminated immediately and at all costs meant they were not able to plan how that would happen.

- Their opposition to all forms of authority, even legitimate leadership, meant they were ineffective at organizing revolutionary forces.

Revolutionary Objectives

- Our movement is primarily a spiritual one. One cannot understand Christianity by studying its system of churches, since primarily it is a body of teachings. Likewise, the core of our movement is in our philosophy, ideas, and concepts, which then inform our ideas on governance and economics.

- We must build a strong intellectual fabric that inoculates us and fosters resilience, as well as equipping us with the means to be effective in our work.

- There are two legacies in technology, one informed by state civilization and the other by society. The technology we create is to solve problems that society and aligned communities have.

Discussion group

From Wednesday 1st May 2024 to 12th March 2025, there were biweekly philosophy meetings in darkirc in #philosophy.

The meetings are now on pause due to more pressing concerns (shipping DarkFi).

Meeting schedule:

| Title | Date | Author |
|---|---|---|
| Free software philosophy and open source | 1st of May, 2024 | Tere Vadén, Niklas Vainio |
| Do Artifacts Have Politics? | 16th of May, 2024 | Langdon Winner |
| The Moral Character of Cryptographic Work | 26th of June, 2024 | Philip Rogaway |
| Gold and Glory in Times of Thought-Chaos | 10th of July, 2024 | Charlotte Fang |
| The Rainbow and the Worm | 24th of July, 2024 | Mae-Wan Ho |
| Squad Wealth | 7th of August, 2024 | Sam Hart, Toby Shorin, Laura Lotti |
| Infocracy: Digitization and the Crisis of Democracy | 4th of September, 2024 | Byung-Chul Han |
| The System's Neatest Trick | 18th of September, 2024 | Theodore Kaczynski |
| Rescheduled | 2nd of October, 2024 | |
| Political Theology | 16th of October, 2024 | Carl Schmitt |
| Ganienkeh Manifesto | 30th of October, 2024 | Mohawk Nation |
| Rescheduled | 13th of November, 2024 | |
| A New Nationalism for the New Ireland/Complimentary Reading - Solutions to the Northern Ireland Problem | 27th of November, 2024 | Desmond Fennell |
| Two Cheers for Anarchism - Chapter 2, starting at 'Fragment 7', all of Chapter 4 | 11th of December, 2024 | James C. Scott |
| Rescheduled | 25th of December, 2024 | |
| Will to Power - Extracts (see PDF) | 8th of January, 2025 | Friedrich Nietzsche |
| Post Capitalist Desire, Lecture 1 - from 1:11:30 to 2:42:00 on Youtube or pages 35 to 78 in the book | 22nd of January, 2025 | Mark Fisher |
| Algorithmic Sovereignty Core reading: Chapter 1 & 2 | 5th of February, 2025 | Jaromil |
| Economic Consequences of Organized Violence | 19th of February, 2025 | Frederick Lane |
| Assassination Politics | 12th of March, 2025 | Jim Bell |

Feel free to propose a text for a future meeting by sharing it in #philosophy.

Definition of Democratic Civilization

From 'The Sociology of Freedom: Manifesto of the Democratic Civilization, Volume 3' by Abdullah Ocalan.

Annotations are our own. The text is otherwise unchanged.

What is the subject of moral and political society?

The school of social science that postulates the examination of the existence and development of social nature on the basis of moral and political society could be defined as the democratic civilization system. The various schools of social science base their analyses on different units. Theology and religion prioritize society. For scientific socialism, it is class. The fundamental unit for liberalism is the individual. There are, of course, schools that prioritize power and the state and others that focus on civilization. All these unit-based approaches must be criticized, because, as I have frequently pointed out, they are not historical, and they fail to address the totality. A meaningful examination would have to focus on what is crucial from the point of view of society, both in terms of history and actuality. Otherwise, the result will only be one more discourse.

Identifying our fundamental unit as moral and political society is significant, because it also covers the dimensions of historicity and totality. Moral and political society is the most historical and holistic expression of society. Morals and politics themselves can be understood as history. A society that has a moral and political dimension is a society that is the closest to the totality of all its existence and development. A society can exist without the state, class, exploitation, the city, power, or the nation, but a society devoid of morals and politics is unthinkable. Societies may exist as colonies of other powers, particularly capital and state monopolies, and as sources of raw materials. In those cases, however, we are talking about the legacy of a society that has ceased to be.

Individualism is a state of war

There is nothing gained by labeling moral and political society—the natural state of society—as slave-owning, feudal, capitalist, or socialist. Using such labels to describe society masks reality and reduces society to its components (class, economy, and monopoly). The bottleneck encountered in discourses based on such concepts as regards the theory and practice of social development stems from errors and inadequacies inherent in them. If all of the analyses of society referred to with these labels that are closer to historical materialism have fallen into this situation, it is clear that discourses with much weaker scientific bases will be in a much worse situation. Religious discourses, meanwhile, focus heavily on the importance of morals but have long since turned politics over to the state. Bourgeois liberal approaches not only obscure the society with moral and political dimensions, but when the opportunity presents itself they do not hesitate to wage war on this society. Individualism is a state of war against society to the same degree as power and the state is. Liberalism essentially prepares society, which is weakened by being deprived of its morals and politics, for all kinds of attacks by individualism. Liberalism is the ideology and practice that is most anti-society.

The rise of scientific positivism

In Western sociology (there is still no science called Eastern sociology) concepts such as society and civilization system are quite problematic. We should not forget that the need for sociology stemmed from the need to find solutions to the huge problems of crises, contradictions, and conflicts and war caused by capital and power monopolies. Every branch of sociology developed its own thesis about how to maintain order and make life more livable. Despite all the sectarian, theological, and reformist interpretations of the teachings of Christianity, as social problems deepened, interpretations based on a scientific (positivist) point of view came to the fore. The philosophical revolution and the Enlightenment (seventeenth and eighteenth centuries) were essentially the result of this need. When the French Revolution complicated society’s problems rather than solving them, there was a marked increase in the tendency to develop sociology as an independent science. Utopian socialists (Henri de Saint-Simon, Charles Fourier, and Pierre-Joseph Proudhon), together with Auguste Comte and Émile Durkheim, represent the preliminary steps in this direction. All of them are children of the Enlightenment, with unlimited faith in science. They believed they could use science to re-create society as they wished. They were playing God. In Hegel’s words, God had descended to earth and, what’s more, in the form of the nation-state. What needed to be done was to plan and develop specific and sophisticated “social engineering” projects. There was no project or plan that could not be achieved by the nation-state if it so desired, as long as it embraced the “scientific positivism” and was accepted by the nation-state!

Capitalism as an iron cage

British social scientists (political economists) added economic solutions to French sociology, while German ideologists contributed philosophically. Adam Smith and Hegel in particular made major contributions. There was a wide variety of prescriptions from both the left and right to address the problems arising from the horrendous abuse of the society by the nineteenth-century industrial capitalism. Liberalism, the central ideology of the capitalist monopoly has a totally eclectic approach, taking advantage of any and all ideas, and is the most practical when it comes to creating almost patchwork-like systems. It was as if the right- and left- wing schematic sociologies were unaware of social nature, history, and the present while developing their projects in relation to the past (the quest for the “golden age” by the right) or the future (utopian society). Their systems would continually fragment when they encountered history or current life. The reality that had imprisoned them all was the “iron cage” that capitalist modernity had slowly cast and sealed them in, intellectually and in their practical way of life. However, Friedrich Nietzsche’s ideas of metaphysicians of positivism or castrated dwarfs of capitalist modernity bring us a lot closer to the social truth. Nietzsche leads the pack of rare philosophers who first drew attention to the risk of society being swallowed up by capitalist modernity. Although he is accused of serving fascism with his thoughts, his foretelling of the onset of fascism and world wars was quite enticing.

The increase in major crises and world wars, along with the division of the liberal center into right- and left-wing branches, was enough to bankrupt positivist sociology. In spite of its widespread criticism of metaphysics, social engineering has revealed its true identity with authoritarian and totalitarian fascism as metaphysics at its shallowest. The Frankfurt School is the official testimonial of this bankruptcy. The École Annales and the 1968 youth uprising led to various postmodernist sociological approaches, in particular Immanuel Wallerstein’s capitalist world-system analysis. Tendencies like ecology, feminism, relativism, the New Left, and world-system analysis launched a period during which the social sciences splintered. Obviously, financial capital gaining hegemony as the 1970s faded also played an important role. The upside of these developments was the collapse of the hegemony of Eurocentric thought. The downside, however, was the drawbacks of a highly fragmented social sciences.

The problems of Eurocentric sociology

Let’s summarize the criticism of Eurocentric sociology:

- Positivism, which criticized and denounced both religion and metaphysics, has not escaped being a kind of religion and metaphysics in its own right. This should not come as a surprise. Human culture requires metaphysics. The issue is to distinguish good from bad metaphysics.

- An understanding of society based on dichotomies like primitive vs. modern, capitalist vs. socialist, industrial vs. agrarian, progressive vs. reactionary, divided by class vs. classless, or with a state vs. stateless prevents the development of a definition that comes closer to the truth of social nature. Dichotomies of this sort distance us from social truth.

- To re-create society is to play the modern god. More precisely, each time society is re-created there is a tendency to form a new capital and power-state monopoly. Much like medieval theism was ideologically connected to absolute monarchies (sultanates and shāhanshāhs), modern social engineering as re-creation is essentially the divine disposition and ideology of the nation-state. Positivism in this regard is modern theism.

- Revolutions cannot be interpreted as the re-creation acts of society. When understood in this way, they cannot escape positivist theism. Revolutions can only be defined as social revolutions to the extent that they free society from the excessive burden of capital and power.

- The task of revolutionaries cannot be defined as creating any social model of their making but more correctly as playing a role in contributing to the development of moral and political society.

- Methods and paradigms to be applied to social nature should not be identical to those that relate to first nature. While the universalist approach to first nature provides results that come closer to the truth (I don’t believe there is an absolute truth), relativism in relation to social nature may get us closer to the truth. The universe can be explained neither by an infinite universalist linear discourse nor by a concept of infinite similar circular cycles.

- A social regime of truth needs to be reorganized on the basis of these and many other criticisms. Obviously, I am not talking about a new divine creation, but I do believe that the greatest feature of the human mind is the power to search for and build truth.

A new social science

In light of these criticisms, I offer the following suggestions in relation to the social science system that I want to define:

A more humane social nature

- I would not present social nature as a rigid universalist truth with mythological, religious, metaphysical, and scientific (positivist) patterns. Understanding it to be the most flexible form of basic universal entities that encompass a wealth of diversities but are tied down to conditions of historical time and location more closely approaches the truth. Any analysis, social science, or attempt to make practical change without adequate knowledge of the qualities of social nature may well backfire. The monotheistic religions and positivism, which have appeared throughout the history of civilization claiming to have found the solution, were unable to prevent capital and power monopolies from gaining control. It is therefore their irrevocable task, if they are to contribute to moral and political society, to develop a more humane analysis based on a profound self-criticism.

- Moral and political society is the main element that gives social nature its historical and complete meaning and represents the unity in diversity that is basic to its existence. It is the definition of moral and political society that gives social nature its character, maintains its unity in diversity, and plays a decisive role in expressing its main totality and historicity. The descriptors commonly used to define society, such as primitive, modern, slave-owning, feudal, capitalist, socialist, industrial, agricultural, commercial, monetary, statist, national, hegemonic, and so on, do not reflect the decisive features of social nature. On the contrary, they conceal and fragment its meaning. This, in turn, provides a base for faulty theoretical and practical approaches and actions related to society.

Protecting the social fabric

- Statements about renewing and re-creating society are part of operations meant to constitute new capital and power monopolies in terms of their ideological content. The history of civilization, the history of such renewals, is the history of the cumulative accumulation of capital and power. Instead of divine creativity, the basic action society needs most is to struggle against factors that prevent the development and functioning of the moral and political social fabric. A society that operates its moral and political dimensions freely is a society that will continue its development in the best way.

- Revolutions are forms of social action resorted to when society is sternly prevented from freely exercising and maintaining its moral and political function. Revolutions can and should be accepted as legitimate by society only when they do not seek to create new societies, nations, or states but to restore to moral and political society its ability to function freely.

- Revolutionary heroism must find meaning through its contributions to moral and political society. Any action that does not have this meaning, regardless of its intent and duration, cannot be defined as revolutionary social heroism. What determines the role of individuals in society in a positive sense is their contribution to the development of moral and political society.

- No social science that hopes to develop these key features through profound research and examination should be based on a universalist linear progressive approach or on a singular infinite cyclical relativity. In the final instance, instead of these dogmatic approaches that serve to legitimize the cumulative accumulation of capital and power throughout the history of civilization, social sciences based on a non-destructive dialectic methodology that harmonizes analytical and emotional intelligence and overcomes the strict subject-object mold should be developed.

The framework of moral and political society

The paradigmatic and empirical framework of moral and political society, the main unit of the democratic civilization system, can be presented through such hypotheses. Let me present its main aspects:

- Moral and political society is the fundamental aspect of human society that must be continuously sought. Society is essentially moral and political.

- Moral and political society is located at the opposite end of the spectrum from the civilization systems that emerged from the triad of city, class, and state (which had previously been hierarchical structures).

- Moral and political society, as the history of social nature, develops in harmony with the democratic civilization system.

- Moral and political society is the freest society. A functioning moral and political fabric, with functioning organs, is the most decisive dynamic not only for freeing society but also for keeping it free. No revolution or its heroines and heroes can free society to the degree that the development of a healthy moral and political dimension will. Moreover, revolution and its heroines and heroes can only play a decisive role to the degree that they contribute to moral and political society.

- A moral and political society is a democratic society. Democracy is only meaningful on the basis of the existence of a moral and political society that is open and free. A democratic society where individuals and groups become subjects is the form of governance that best develops moral and political society. More precisely, we call a functioning political society a democracy. Politics and democracy are truly identical concepts. If freedom is the space within which politics expresses itself, then democracy is the way in which politics is exercised in this space. The triad of freedom, politics, and democracy cannot lack a moral basis. We could refer to morality as the institutionalized and traditional state of freedom, politics, and democracy.

- Moral and political societies are in a dialectical contradiction with the state, which is the official expression of all forms of capital, property, and power. The state constantly tries to substitute law for morality and bureaucracy for politics. The official state civilization develops on one side of this historically ongoing contradiction, with the unofficial democratic civilization system developing on the other side. Two distinct typologies of meaning emerge. Contradictions may either grow more violent and lead to war or there may be reconciliation, leading to peace.

- Peace is only possible if moral and political society forces and the state monopoly forces have the will to live side by side unarmed and with no killing. There have been instances when rather than society destroying the state or the state destroying society, a conditional peace called democratic reconciliation has been reached. History doesn’t take place either in the form of democratic civilization—as the expression of moral and political society—or totally in the form of civilization systems—as the expression of class and state society. History has unfolded as an intense relationship rife with contradiction between the two, with successive periods of war and peace. It is quite utopian to think that this situation, with at least a five-thousand-year history, can be immediately resolved by emergency revolutions. At the same time, to embrace it as if it is fate and cannot be interfered with would also not be the correct moral and political approach. Knowing that struggles between systems will be protracted, it makes more sense and will prove more effective to adopt strategic and tactical approaches that expand the freedom and democracy sphere of moral and political society.

- Defining moral and political society in terms of communal, slave-owning, feudal, capitalist, and socialist attributes serves to obscure rather than elucidate matters. Clearly, in a moral and political society there is no room for slave-owning, feudal, or capitalist forces, but, in the context of a principled reconciliation, it is possible to take an aloof approach to these forces, within limits and in a controlled manner. What’s important is that moral and political society should neither destroy them nor be swallowed up by them; the superiority of moral and political society should make it possible to continuously limit the reach and power of the central civilization system. Communal and socialist systems can identify with moral and political society insofar as they themselves are democratic. This identification is, however, not possible if they have a state.

- Moral and political society cannot seek to become a nation-state, establish an official religion, or construct a non-democratic regime. The right to determine the objectives and nature of society lies with the free will of all members of a moral and political society. Just as with current debates and decisions, strategic decisions are the purview of society’s moral and political will and expression. The essential thing is to have discussions and to become a decision-making power. A society that holds this power can determine its preferences in the soundest possible way. No individual or force has the authority to decide on behalf of moral and political society, and social engineering has no place in these societies.

Liberating democratic civilization from the State

When viewed in the light of the various broad definitions I have presented, it is obvious that the democratic civilization system—essentially the moral and political totality of social nature—has always existed and sustained itself as the flip side of the official history of civilization. Despite all the oppression and exploitation at the hands of the official world-system, the other face of society could not be destroyed. In fact, it is impossible to destroy it. Just as capitalism cannot sustain itself without noncapitalist society, civilization—the official world-system—also cannot sustain itself without the democratic civilization system. More concretely, the civilization with monopolies cannot sustain itself without the existence of a civilization without monopolies. The opposite is not true. Democratic civilization, representing the historical flow of the system of moral and political society, can sustain itself more comfortably and with fewer obstacles in the absence of the official civilization.

I define democratic civilization as a system of thought, the accumulation of thought, and the totality of moral rules and political organs. I am not only talking about a history of thought or the social reality within a given moral and political development. The discussion does, however, encompass both issues in an intertwined manner. I consider it important and necessary to explain the method in terms of democratic civilization’s history and elements, because this totality of alternate discourse and structures is prevented by the official civilization. I will address these issues in subsequent sections.

Recommended Books

- Core Texts

- Philosophy

- Lunarpunk

- (Geo)-politics

- Philosophy of technology

- History - Myth - Religion

- Python

- C

- Rust

- Mathematics

- Cryptography

- Miscellaneous

Core Texts

- Manifesto for a Democratic Civilization parts 1, 2 & 3 by Ocalan. These are a good high-level overview of history, philosophy and spiritualism, covering the 5000-year legacy of state civilization, the development of philosophy and humanity's relationship with nature.

- New Paradigm in Macroeconomics by Werner explains how economics and finance work on a fundamental level. Emphasizes the importance of economic networks in issuing credit, and goes through all the major economic schools of thought.

- Authoritarian vs Democratic Technics by Mumford is a short 10 page summary of his books The Myth of the Machine parts 1 & 2. Mumford was a historian and philosopher of science and technology. His books describe the two dominant legacies within technology; one enslaving humanity, and the other one liberating humanity from the state.

- GNU and Free Software texts

Philosophy

- The Story of Philosophy by Will Durant

- The Sovereign Individual is very popular among crypto people. It makes several prescient predictions, including about cryptocurrency, algorithmic money and the response by nation states to this emergent technology. Good reading to understand the coming conflict between cryptocurrency and states.

- Jean Baudrillard, Spirit of Terrorism

Lunarpunk

- Carl Schmitt, Theory of a Partisan

- Ernst Junger, Forest Passage

- Nietzsche, Thus Spoke Zarathustra

- Nietzsche, Will to Power

- Deleuze, Nietzsche and Philosophy

- Mircea Eliade, Patterns in Comparative Religion

- Abdullah Ocalan, Sociology of Freedom

(Geo)-politics

- Desmond Fennell, Beyond Nationalism

- Alexander Dugin, The Fourth Political Theory

- Padraig Pearse, Collected Works

Philosophy of technology

- Ted Kaczynski, Anti-Tech Revolution

- Nick Land, Fanged Noumena

- Ivan Illich, Tools for Conviviality

- Heidegger, The Question Concerning Technology

- Yuk Hui, The Question Concerning Technology in China

History - Myth - Religion

- Alain de Benoist, On Being a Pagan

- Peter Berresford Ellis, A Brief History of the Druids

- Mircea Eliade, The Myth of the Eternal Return

Python

- Python Crash Course by Eric Matthes. Good beginner text.

- O'Reilly books: Python Data Science, Python for Data Analysis

C

- The C Programming Language by K&R (2nd Edition ANSI C)

Rust

- The Rust Programming Language from No Starch Press. Good intro to learn Rust.

- Rust for Rustaceans from No Starch Press is an advanced Rust book.

Mathematics

Abstract Algebra

- Pinter is your fundamental algebra text. Everybody should study this book. My full solutions here.

- Basic Abstract Algebra by Dover is also a good reference.

- Algebra by Dummit & Foote. The best reference book you will use many times. Just buy it.

- Algebra by Serge Lang. More advanced algebra book but often contains material not found in the D&F book.

Elliptic Curves

- Washington is a standard text and takes a computational approach. The math is often quite obtuse because he avoids introducing advanced notation, instead keeping things often in algebra equations.

- Silverman is the best text but harder than Washington. The material however is rewarding.

Algebraic Geometry

- Ideals, Varieties and Algorithms by Cox, Little, O'Shea. They have a follow up advanced graduate text called Using Algebraic Geometry. It's the sequel book explaining things that were missing from the first text.

- Hartshorne is a famous text.

Commutative Algebra

- Atiyah-MacDonald. Many independent solution sheets online if you search for them. Or ask me ;)

Algebraic Number Theory

- Algebraic Number Theory by Frazer Jarvis, chapters 1-5 (~100 pages) is your primary text. Book is ideal for self study since it has solutions for exercises.

- Introductory Algebraic Number Theory by Alaca and Williams is a bit dry but a good supplementary reference text.

- Elementary Number Theory by Jones and Jones, is a short text recommended in the preface to the Jarvis book.

- Algebraic Number Theory by Milne. These are course notes which are clear and concise.

- Short Algebraic Number Theory course, see also the lecture notes.

- Cohen book on computational number theory is a gold mine of standard algos.

- LeVeque, Fundamentals of Number Theory

Cryptography

ZK

- Proofs, Arguments, and Zero-Knowledge by Justin Thaler.

Miscellaneous

- Cryptoeconomics by Eric Voskuil.

Compiling and Running a Node

DISCLAIMER: This is a work in progress and functionalities may not be available on the current deployed testnet as of 22-May-2025.

Please read the whole document first before executing commands, to understand all the steps required and how each component operates. Unless instructed otherwise, each daemon runs on its own shell, so don't stop a running one to start another.

Each command to execute will be inside a codeblock, on its first line, marked by the user $ symbol, followed by the expected output. For longer command outputs, some lines will be omitted to keep the guide simple.

We also strongly suggest first executing the steps of this guide in a local environment to become familiar with each command, before broadcasting transactions to the actual network.

Overview

This tutorial will cover the three DarkFi blockchain components and their current features. The components covered are:

- darkfid is the DarkFi fullnode. It validates blockchain transactions and stays connected to the p2p network.

- drk is a CLI wallet. It provides an interface to smart contracts such as Money and DAO, manages our keys and coins, and scans the blockchain to update our balances.

- minerd is the DarkFi mining daemon. It connects to darkfid over RPC and requests new block headers to mine.

The config files for all three daemons are divided into three sections, each marked [network_config]. The sections look like this:

[network_config."testnet"]

[network_config."mainnet"]

[network_config."localnet"]

At the top of each daemon config file, we can modify the network being used by changing the following line:

# Blockchain network to use

network = "testnet"

This enables us to configure the daemons for different contexts, namely mainnet, testnet and localnet. Mainnet is not active yet. Localnet can be set up by following the instructions here. The rest of this tutorial assumes we are setting up a testnet node.

Compiling

Since this is still an early phase, we will not be installing any of the software system-wide. Instead, we'll be running all the commands from the git repository, so we're able to easily pull any necessary updates.

Refer to the main DarkFi page for instructions on how to install Rust and the necessary dependencies. Skip the last step of the build process, as you don't need to compile all binaries of the project.

Once you have the repository in place, and everything is installed, we can compile the darkfid node and the drk wallet CLI:

$ make darkfid drk

...

make -C bin/darkfid \

PREFIX="/home/anon/.cargo" \

CARGO="cargo" \

RUST_TARGET="x86_64-unknown-linux-gnu" \

RUSTFLAGS=""

make[1]: Entering directory '/home/anon/darkfi/bin/darkfid'

RUSTFLAGS="" cargo build --target=x86_64-unknown-linux-gnu --release --package darkfid

...

Compiling darkfid v0.5.0 (/home/anon/darkfi/bin/darkfid)

Finished `release` profile [optimized] target(s) in 4m 19s

cp -f ../../target/x86_64-unknown-linux-gnu/release/darkfid darkfid

cp -f ../../target/x86_64-unknown-linux-gnu/release/darkfid ../../darkfid

make[1]: Leaving directory '/home/anon/darkfi/bin/darkfid'

make -C bin/drk \

PREFIX="/home/anon/.cargo" \

CARGO="cargo" \

RUST_TARGET="x86_64-unknown-linux-gnu" \

RUSTFLAGS=""

make[1]: Entering directory '/home/anon/darkfi/bin/drk'

RUSTFLAGS="" cargo build --target=x86_64-unknown-linux-gnu --release --package drk

...

Compiling drk v0.5.0 (/home/anon/darkfi/bin/drk)

Finished `release` profile [optimized] target(s) in 2m 16s

cp -f ../../target/x86_64-unknown-linux-gnu/release/drk drk

cp -f ../../target/x86_64-unknown-linux-gnu/release/drk ../../drk

make[1]: Leaving directory '/home/anon/darkfi/bin/drk'

This process will now compile the node and the wallet CLI tool.

When finished, we can begin using the network. Run darkfid and drk once so their config files are spawned on your system. These config files will be used to configure darkfid and drk.

Please note that the exact paths may differ depending on your local setup.

$ ./darkfid

Config file created in "~/.config/darkfi/darkfid_config.toml". Please review it and try again.

$ ./drk interactive

Config file created in "~/.config/darkfi/drk_config.toml". Please review it and try again.

Running

Using Tor

DarkFi supports Tor for network-level anonymity. To use the testnet over Tor, you'll need to make some modifications to the darkfid config file.

For detailed instructions and configuration options on how to do this, follow the Tor Guide.

The guide uses darkirc port 25552 for seeds and 25551 for the torrc configuration, so in your actual configuration replace them with the darkfid ones: seeds use port 8343 and torrc should use port 8342.

Wallet initialization

Now it's time to initialize your wallet. For this we use drk, a separate wallet CLI which is created to interface with the smart contract used for payments and swaps.

First, you need to change the password in the drk config. Open your config file in a text editor (the default path is ~/.config/darkfi/drk_config.toml). Look for the section marked [network_config."testnet"] and change this line:

# Password for the wallet database

wallet_pass = "changeme"

Once you've changed the default password for your testnet wallet, we can proceed with the wallet initialization. We simply have to initialize a wallet and create a keypair. The wallet address shown in the outputs is an example and will differ from the one you get.

$ ./drk wallet initialize

Initializing Money Merkle tree

Successfully initialized Merkle tree for the Money contract

Generating alias DRK for Token: 241vANigf1Cy3ytjM1KHXiVECxgxdK4yApddL8KcLssb

Initializing DAO Merkle trees

Successfully initialized Merkle trees for the DAO contract

$ ./drk wallet keygen

Generating a new keypair

New address:

DZnsGMCvZU5CEzvpuExnxbvz6SEhE2rn89sMcuHsppFE6TjL4SBTrKkf

$ ./drk wallet default-address 1

The second command will print out your new DarkFi address where you can receive payments. Take note of it. Alternatively, you can always retrieve your default address using:

$ ./drk wallet address

DZnsGMCvZU5CEzvpuExnxbvz6SEhE2rn89sMcuHsppFE6TjL4SBTrKkf

Miner

Running a miner is not necessary for broadcasting transactions or proceeding with the rest of the tutorial (darkfid and drk handle this), but if you want to help secure the network, you can participate in the mining process by running the native minerd mining daemon.

To mine on DarkFi we need to add a recipient to minerd that specifies where the mining rewards will be minted to.

First, compile it:

$ make minerd

...

make -C bin/minerd \

PREFIX="/home/anon/.cargo" \

CARGO="cargo" \

RUST_TARGET="x86_64-unknown-linux-gnu" \

RUSTFLAGS=""

make[1]: Entering directory '/home/anon/darkfi/bin/minerd'

RUSTFLAGS="" cargo build --target=x86_64-unknown-linux-gnu --release --package minerd

...

Compiling minerd v0.5.0 (/home/anon/darkfi/bin/minerd)

Finished `release` profile [optimized] target(s) in 1m 25s

cp -f ../../target/x86_64-unknown-linux-gnu/release/minerd minerd

cp -f ../../target/x86_64-unknown-linux-gnu/release/minerd ../../minerd

make[1]: Leaving directory '/home/anon/darkfi/bin/minerd'

This process will now compile the mining daemon. When finished, run minerd once so that it spawns its config file on your system. This config file is used to configure minerd. You can define how many threads will be used for mining. RandomX can use up to 2080 MiB of shared memory, so configure minerd so that it does not consume all of your system's available memory. Refer to the RAM consumption section to see expected totals under various configurations, so you can configure your minerd accordingly.

$ ./minerd

Config file created in "~/.config/darkfi/minerd_config.toml". Please review it and try again.

You now have to configure minerd to use your wallet address as the rewards recipient, when it retrieves blocks from darkfid to mine. Open your minerd config file with a text editor (the default path is ~/.config/darkfi/minerd_config.toml) and replace the YOUR_WALLET_ADDRESS_HERE string with your drk wallet address:

# Put the address from `./drk wallet address` here

recipient = "YOUR_WALLET_ADDRESS_HERE"

You can retrieve your drk wallet address as follows:

$ ./drk wallet address

DZnsGMCvZU5CEzvpuExnxbvz6SEhE2rn89sMcuHsppFE6TjL4SBTrKkf

Notes:

- When modifying the minerd config file to use with the testnet, be sure to change the values under the section marked [network_config."testnet"] (not localnet or mainnet!).

- If you are not on the same network as the darkfid instance you are using, you must configure and use tcp+tls for the RPC endpoints, so your traffic is not plaintext, as it contains your wallet address used for the block rewards.

Once that's in place, you can run it again and minerd will start, polling darkfid for new block headers to mine.

$ ./minerd

14:20:06 [INFO] Starting DarkFi Mining Daemon...

14:20:06 [INFO] Initializing a new mining daemon...

14:20:06 [INFO] Mining daemon initialized successfully!

14:20:06 [INFO] Starting mining daemon...

14:20:06 [INFO] Mining daemon started successfully!

14:20:06 [INFO] Received new job to mine block header beb0...42aa with key 0edc...0679 for target: 0xffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff

14:20:06 [INFO] Mining block header beb0...42aa with key 0edc...0679 for target: 0xffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff

14:20:06 [INFO] Mined block header beb0...42aa with nonce: 1

14:20:06 [INFO] Mined block header hash: 36fe...753c

14:20:06 [INFO] Submitting solution to darkfid...

14:20:06 [INFO] Submition result: accepted

...

Minerd configuration

The minerd configuration file provides several ways to optimize RAM utilization and mining hashrate. This section describes some of the provided configuration flags, along with a table at the end showing the daemon's total RAM consumption with each. By default, minerd runs in fast-mode, where 2080 MiB of shared memory is required for the RandomX dataset. To enable or disable a flag, simply set its value to true or false in the configuration file and restart minerd. Read what each flag does before enabling it.

light-mode

In this mode, minerd will run using only 256 MiB of shared memory, but it will run significantly slower, with a huge impact on hashrate. It is used mainly for verification, not mining, but it's available in case system resources are extremely limited.

large-pages

Huge Pages, also known as Large Pages (on Windows) and Super Pages (on BSD or macOS), is the practice of reserving RAM with a larger than default chunk (page) size, which gives the CPU/OS fewer entries to look up and increases mining hashrate by up to 50%. The general recommendation is 1280 pages for RandomX. Please note that 1280 pages, at 2 MiB per page, means 2560 MiB of memory will be reserved for huge pages and become unavailable for other use. Before enabling the flag, we must reserve the huge pages, otherwise minerd will give us an allocation error.

To temporarily (until next reboot) reserve huge pages, execute as root:

# sysctl -w vm.nr_hugepages=1280

To verify huge pages have been reserved, execute:

$ grep Huge /proc/meminfo

...

HugePages_Total: 1280

HugePages_Free: 1280

...
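The arithmetic behind these numbers, and the check against /proc/meminfo, can be sketched in Python as follows. This is an illustrative helper rather than anything shipped with DarkFi: the function names are made up, and the 2 MiB page size is an assumption that matches the 1280 pages = 2560 MiB figure above.

```python
PAGE_MIB = 2             # assumed huge page size (2 MiB)
DATASET_MIB = 2080       # RandomX fast-mode dataset size stated in this guide
RECOMMENDED_PAGES = 1280


def pages_needed(size_mib: int, page_mib: int = PAGE_MIB) -> int:
    """Smallest number of huge pages that can hold size_mib (ceiling division)."""
    return -(-size_mib // page_mib)


def hugepage_counts(meminfo_text: str) -> dict[str, int]:
    """Extract the HugePages_* counters from /proc/meminfo style text."""
    counts = {}
    for line in meminfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.startswith("HugePages_"):
            counts[key] = int(value.split()[0])
    return counts


# Sample text in the same shape as the output shown above.
SAMPLE_MEMINFO = """\
AnonHugePages:         0 kB
HugePages_Total:    1280
HugePages_Free:     1280
Hugepagesize:       2048 kB
"""

counts = hugepage_counts(SAMPLE_MEMINFO)
print("pages for dataset:", pages_needed(DATASET_MIB))
print("reserved MiB:", RECOMMENDED_PAGES * PAGE_MIB)
print("enough free pages:", counts["HugePages_Free"] >= pages_needed(DATASET_MIB))
```

On a real system, pass the contents of /proc/meminfo to hugepage_counts instead of the sample text.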

To permanently reserve huge pages, you need to modify your boot configuration. In this example, we will use grub to reserve the pages.

Note: Before proceeding, take a backup of your current grub configuration file and be extra careful when following the instructions, as mistakes can result in your system not booting.

Open your current grub configuration file (the default path is /etc/default/grub), find the GRUB_CMDLINE_LINUX_DEFAULT option and append hugepagesz=2MB hugepages=1280 at the end of its parameters. The option should look like this:

GRUB_CMDLINE_LINUX_DEFAULT="{YOUR_PREVIOUS_PARAMETERS} hugepagesz=2MB hugepages=1280"

Save the file and execute as root:

# update-grub

Now you can reboot your system and huge pages will be reserved. If you want to revert, just remove the huge pages parameters from your grub configuration, update it and reboot.

RAM consumption

Here is a table showing the total RAM consumption of the minerd daemon in MiB for various mining thread counts and optimization flags.

| Threads | light-mode | fast-mode | fast-mode + large-pages |
|---|---|---|---|
| 1 | 271 | 2351 | 14.0 |
| 4 | 277 | 2357 | 14.2 |
| 8 | 285 | 2365 | 14.4 |

Note: The last column shows such low RAM consumption because the dataset is allocated in the already reserved system huge pages.
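The figures above report only the RAM used by the minerd process itself. As a rough sketch in Python (not part of DarkFi; the dictionary simply transcribes the table, and the 2560 MiB figure is the huge page reservation for 1280 pages mentioned earlier), the memory effectively committed on the machine can be estimated by adding the reservation back in:

```python
# RAM consumption of minerd in MiB, transcribed from the table above.
RAM_MIB = {
    "light-mode": {1: 271, 4: 277, 8: 285},
    "fast-mode": {1: 2351, 4: 2357, 8: 2365},
    "fast-mode + large-pages": {1: 14.0, 4: 14.2, 8: 14.4},
}

# 1280 huge pages of 2 MiB each, reserved system-wide (assumed page size).
RESERVED_HUGE_PAGES_MIB = 2560


def committed_mib(mode: str, threads: int) -> float:
    """Process RAM plus any huge page reservation the mode depends on."""
    usage = RAM_MIB[mode][threads]
    if mode == "fast-mode + large-pages":
        # The dataset lives in the reserved pages, which other programs cannot use.
        usage += RESERVED_HUGE_PAGES_MIB
    return usage


for mode in RAM_MIB:
    print(mode, committed_mib(mode, 4))
```

Only the thread counts listed in the table are supported by this lookup; other values raise a KeyError rather than guessing.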

Darkfid

Now that the darkfid configuration is in place, you can run it again and darkfid will start, create the necessary keys for validation of blocks and transactions, and begin syncing the blockchain.

$ ./darkfid

14:23:23 [INFO] Initializing DarkFi node...

14:23:23 [INFO] Node is configured to run with fixed PoW difficulty: 1

14:23:23 [INFO] Initializing a Darkfi daemon...

14:23:23 [INFO] Initializing Validator

14:23:23 [INFO] Initializing Blockchain

14:23:23 [INFO] Deploying native WASM contracts

14:23:23 [INFO] Deploying Money Contract with ContractID BZHKGQ26bzmBithTQYTJtjo2QdCqpkR9tjSBopT4yf4o

14:23:29 [INFO] Successfully deployed Money Contract

14:23:29 [INFO] Deploying DAO Contract with ContractID Fd8kfCuqU8BoFFp6GcXv5pC8XXRkBK7gUPQX5XDz7iXj

...

As it's syncing, you'll see periodic messages like this:

...

[INFO] Blocks received: 4020/4763

...

This will give you an indication of the current progress. Keep it running, and you should see a Blockchain synced! message after some time.
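If you want a percentage rather than raw counts, the progress line can be parsed with a few lines of Python. This is a small sketch, not a DarkFi feature: the function name is hypothetical, and it assumes the log line keeps the "Blocks received: X/Y" shape shown above.

```python
import re
from typing import Optional

PROGRESS_RE = re.compile(r"Blocks received: (\d+)/(\d+)")


def sync_progress(log_line: str) -> Optional[float]:
    """Return sync progress in percent, or None if the line carries no progress."""
    match = PROGRESS_RE.search(log_line)
    if match is None:
        return None
    received, total = map(int, match.groups())
    if total == 0:
        return None
    return 100.0 * received / total


line = "[INFO] Blocks received: 4020/4763"
print(f"{sync_progress(line):.1f}%")
```

Lines without a progress counter return None, so the function can be applied to every line of the darkfid output.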

If you're running minerd, you should see a notification like this:

...

[INFO] [RPC] Server accepted conn from tcp://127.0.0.1:44974/

...

This means that darkfid and minerd are connected over RPC and minerd can start mining. You will see log messages like these:

...

14:23:56 [INFO] [RPC] Created new blocktemplate: address=9vw6...fG1U, spend_hook=-, user_data=-, hash=beb0...42aa

14:24:04 [INFO] [RPC] Got solution submission for block template: beb0...42aa

14:24:06 [INFO] [RPC] Mined block header hash: 36fe...753c

14:24:06 [INFO] [RPC] Proposing new block to network

...

When darkfid and minerd are correctly connected and you get an

error on minerd like this:

...

[ERROR] Failed mining block header b757...5fb1 with error: Miner task stopped

...

That's expected behavior. It means your setup is correct and you are mining blocks. The Failed mining block header message appears when darkfid receives a new block that extends the current best fork: the next time minerd polls darkfid it retrieves the new block header to mine, interrupting the current mining workers so they can start on the new one.
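Because these interruptions are logged at ERROR level, they can bury errors that do need attention. A minimal sketch of a filter that hides only the expected message is shown here; the function name `unexpected_errors` is hypothetical, and it assumes the log format shown above.

```shell
# Hypothetical helper: print ERROR lines from minerd logs (files given
# as arguments, or stdin), skipping the expected "Miner task stopped"
# interruptions.
unexpected_errors() {
    grep -a '\[ERROR\]' "$@" | grep -a -v 'Miner task stopped'
}
```

If you saved the minerd output to a file, pass that file as an argument. An empty result means every logged error was a routine interruption.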

Otherwise, you'll see a notification like this:

...

[INFO] Mined block header 36fe...753c with nonce: 266292

...

This means the block at the current height has been mined successfully by minerd and propagated to darkfid, which can then broadcast it to the network.

Wallet sync

From this point forward in the guide we will use drk in interactive

mode for all our wallet operations. In another terminal, run the

following command:

$ ./drk interactive

drk>

In order to receive incoming coins, you'll need to use the drk tool to subscribe to darkfid so you can receive notifications for incoming blocks. The blocks have to be scanned for transactions, and to find coins that are intended for you. In the interactive shell, run the following command to subscribe to new blocks:

drk> subscribe

Requested to scan from block number: 0

Last confirmed block reported by darkfid: 1 - da4455f461df6833a68b659d1770f58e44b6bc4abdd934cb22d084c24333255f

Requesting block 0...

Block 0 received! Scanning block...

=======================================

Header {

Hash: b967812a860e8bf43deb03dd4f7cf69258f7719ddb7f2183d4e4fa3559b9f39d

Version: 1

Previous: 86bbac430a4b3a182f125b37a486e9c486bbfa34d84ef4a66b4a23e5f0c625b1

Height: 0

Timestamp: 2025-05-12T13:00:24

Nonce: 0

Transactions Root: 0x081361c364feba0d28a418e2e20c216ce442d5127036e3491ceaf1996fdb3c3b

State Root: afc1694dd6b290d8b92c33d3fc746707da9bed857eb9e90f11683d2e243b8047

Proof of Work data: Darkfi

}

=======================================

[scan_block] Iterating over 1 transactions

[scan_block] Processing transaction: 91525ff00a3755a8df93c626b59f6e36cf021d85ebccecdedc38f3f1890a15fc

Requesting block 1...

Block 1 received! Scanning block...

...

Requested to scan from block number: 2

Last confirmed block reported by darkfid: 1 - da4455f461df6833a68b659d1770f58e44b6bc4abdd934cb22d084c24333255f

Finished scanning blockchain

Subscribing to receive notifications of incoming blocks

Detached subscription to background

All is good. Waiting for block notifications...

Local Deployment

For local (non-testnet) development we recommend running master and using the existing contrib/localnet/darkfid-single-node folder, which provides the corresponding configurations to operate. Some outputs are omitted since they are identical to previous steps.

First, compile the darkfid node, the minerd mining daemon and the drk wallet CLI:

$ make darkfid minerd drk

Enter the localnet folder, and initialize a wallet:

$ cd contrib/localnet/darkfid-single-node/

$ ./init-wallet.sh

Then start the daemons and wait until darkfid is initialized:

$ ./tmux_sessions.sh

After some blocks have been generated we will see some DRK in our test wallet. In a different shell (or tmux pane in the session), navigate to the contrib/localnet/darkfid-single-node folder again and check the wallet balance:

$ ./wallet-balance.sh

Token ID | Aliases | Balance

----------------------------------------------+---------+---------

241vANigf1Cy3ytjM1KHXiVECxgxdK4yApddL8KcLssb | DRK | 20

Don't forget that when using this local node, all operations should be executed inside the contrib/localnet/darkfid-single-node folder, and the ./drk command should be replaced by ../../../drk -c drk.toml. All paths should be relative to this folder.
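To avoid retyping the longer command, you can wrap it in a small shell function. This is only a convenience sketch: the function name `drk_local` and the `DRK_BIN` variable are hypothetical, while the binary path and the `-c drk.toml` argument are the ones given above.

```shell
# Hypothetical wrapper for the localnet wallet. Run it from inside
# contrib/localnet/darkfid-single-node so the relative paths resolve.
DRK_BIN="${DRK_BIN:-../../../drk}"

drk_local() {
    # Forward all arguments to drk, using the localnet configuration.
    "$DRK_BIN" -c drk.toml "$@"
}
```

With this in place, `drk_local interactive` takes the place of `./drk interactive` in the rest of the guide.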

Advanced Usage

To run a node in full debug mode:

$ LOG_TARGETS='!sled,!rustls,!net' ./darkfid -vv | tee /tmp/darkfid.log

The sled and net targets are very noisy and slow down the node so

we disable those.

We can now view the log, and grep through it.

$ tail -n +0 -f /tmp/darkfid.log | grep -a --line-buffered -v DEBUG
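For a quick overview of a captured log, you can count lines per log level. The sketch below assumes each line carries a bracketed level tag as in the outputs above; INFO, ERROR and DEBUG appear in this guide, while WARN and TRACE are assumed names, and `count_log_levels` is a hypothetical helper.

```shell
# Hypothetical helper: count log lines per level in the given files
# (or stdin). WARN and TRACE are assumed level names.
count_log_levels() {
    awk 'match($0, /\[(ERROR|WARN|INFO|DEBUG|TRACE)\]/) {
        # Strip the surrounding brackets to get the bare level name.
        level = substr($0, RSTART + 1, RLENGTH - 2)
        n[level]++
    }
    END { for (l in n) print l, n[l] }' "$@" | sort
}
```

Calling `count_log_levels /tmp/darkfid.log` prints one line per level found, for example how many ERROR lines the node has emitted so far.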

Native token

Now that you have your wallet set up, you will need some native DRK tokens in order to perform transactions, since that token is used to pay the transaction fees. You can obtain DRK either by successfully mining a block that gets confirmed or by asking the community on darkirc and/or your comrades for some. Don't forget to tell them to add the --half-split flag when they create the transfer transaction, so you get more than one coin to play with.

After you request some DRK and the other party has submitted a transaction to the network, it should be in the consensus' mempool, waiting for inclusion in the next block(s). Depending on your network configuration, confirmation of the blocks could take some time. You'll have to wait for this to happen. If your drk subscription is running, then after some time your new balance should be in your wallet.

You can check your wallet balance using drk:

drk> wallet balance

Token ID | Aliases | Balance

----------------------------------------------+---------+---------

241vANigf1Cy3ytjM1KHXiVECxgxdK4yApddL8KcLssb | DRK | 20

Creating tokens

On the DarkFi network, we're able to mint custom tokens with some supply. To do this, we need to generate a mint authority keypair and derive a token ID from it. The tokens shown in the outputs are placeholders for the ones that will be generated by you. In the rest of the guide, replace each placeholder with the corresponding value you generated. We can create our own token by executing the following command:

drk> token generate-mint

Successfully imported mint authority for token ID: {TOKEN1}

This will generate a new token mint authority and will tell you what your new token ID is.

You can list your mint authorities with:

drk> token list

Token ID | Aliases | Mint Authority | Token Blind | Frozen | Freeze Height

----------+---------+-------------------------+----------------+--------+---------------

{TOKEN1} | - | {TOKEN1_MINT_AUTHORITY} | {TOKEN1_BLIND} | false | -

For this tutorial we will need two tokens so execute the command again to generate another one.

drk> token generate-mint

Successfully imported mint authority for token ID: {TOKEN2}

Verify you have two tokens by running:

drk> token list

Token ID | Aliases | Mint Authority | Token Blind | Frozen | Freeze Height

----------+---------+-------------------------+----------------+--------+---------------

{TOKEN1} | - | {TOKEN1_MINT_AUTHORITY} | {TOKEN1_BLIND} | false | -

{TOKEN2} | - | {TOKEN2_MINT_AUTHORITY} | {TOKEN2_BLIND} | false | -

Aliases

To make our life easier, we can create token ID aliases, so when we are performing transactions with them, we can use that instead of the full token ID. Multiple aliases per token ID are supported.

The native token alias DRK should already exist, and we can use that

to refer to the native token when executing transactions using it.

We can also list all our aliases using:

drk> alias show

Alias | Token ID

-------+----------------------------------------------

DRK | 241vANigf1Cy3ytjM1KHXiVECxgxdK4yApddL8KcLssb

Note: these aliases are only local to your machine. When exchanging with other users, always verify that your aliases' token IDs match.
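One way to do that check is to look up the token ID behind an alias and compare it with the ID the other party sent you. The sketch below assumes you have copied the `alias show` table into a text file; the function name `alias_token_id` is hypothetical.

```shell
# Hypothetical helper: print the token ID recorded for an alias.
# $1: alias name, $2: file holding a copy of the `alias show` table.
alias_token_id() {
    awk -F'|' -v want="$1" '
        NF == 2 {
            # Trim the padding around both table columns.
            gsub(/^[ \t]+|[ \t]+$/, "", $1)
            gsub(/^[ \t]+|[ \t]+$/, "", $2)
            if ($1 == want) print $2
        }
    ' "$2"
}
```

For instance, `[ "$(alias_token_id DRK aliases.txt)" = "$THEIR_ID" ]` succeeds only when both sides map DRK to the same token ID, where `aliases.txt` and `THEIR_ID` are placeholders of your own.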

Now let's add the two token IDs generated earlier to our aliases:

drk> alias add ANON {TOKEN1}

Generating alias ANON for Token: {TOKEN1}

drk> alias add DAWN {TOKEN2}

Generating alias DAWN for Token: {TOKEN2}

drk> alias show

Alias | Token ID

-------+---------------------------------------------

ANON | {TOKEN1}

DAWN | {TOKEN2}

DRK | 241vANigf1Cy3ytjM1KHXiVECxgxdK4yApddL8KcLssb

Mint transaction

Now let's mint some tokens for ourselves. First grab your wallet address, then create the token mint transaction, and finally broadcast it:

drk> wallet address

{YOUR_ADDRESS}

By default the transaction will be printed in the terminal. Interactive

mode supports UNIX style pipes and exporting/importing to/from files.

We can either export a transaction to a file by appending

> {tx_file_name}.tx, or broadcast it right away by appending

| broadcast. We will broadcast all transactions in the guide, for

simplicity.

drk> token mint ANON 42.69 {YOUR_ADDRESS} | broadcast

[mark_tx_spend] Processing transaction: e9ded45928f2e2dbcb4f8365653220a8e2346987dd8b75fe1ffdc401ce0362c2

[mark_tx_spend] Found Money contract in call 0

[mark_tx_spend] Found Money contract in call 1

[mark_tx_spend] Found Money contract in call 2

Broadcasting transaction...

Transaction ID: e9ded45928f2e2dbcb4f8365653220a8e2346987dd8b75fe1ffdc401ce0362c2

drk> token mint DAWN 20.0 {YOUR_ADDRESS} | broadcast

[mark_tx_spend] Processing transaction: e404241902ba0a8825cf199b3083bff81cd518ca30928ca1267d5e0008f32277

[mark_tx_spend] Found Money contract in call 0

[mark_tx_spend] Found Money contract in call 1

[mark_tx_spend] Found Money contract in call 2

Broadcasting transaction...

Transaction ID: e404241902ba0a8825cf199b3083bff81cd518ca30928ca1267d5e0008f32277

Now the transactions should be published to the network. Once they are confirmed, your wallet should list your new tokens when you run:

drk> wallet balance

Token ID | Aliases | Balance

----------------------------------------------+---------+-------------

241vANigf1Cy3ytjM1KHXiVECxgxdK4yApddL8KcLssb | DRK | 19.98451279

{TOKEN1} | ANON | 42.69

{TOKEN2} | DAWN | 20

Freeze transaction

We can lock a token's supply, disallowing further mints, by executing:

drk> token freeze DAWN | broadcast

[mark_tx_spend] Processing transaction: 138274448ac3af26f253e0a40d0964dc125b99b3c826ba321bcb989cabfb6df6

[mark_tx_spend] Found Money contract in call 0

[mark_tx_spend] Found Money contract in call 1

Broadcasting transaction...

Transaction ID: 138274448ac3af26f253e0a40d0964dc125b99b3c826ba321bcb989cabfb6df6

After the transaction has been confirmed, we will see the token freeze

flag set to true, along with the block height it was frozen on:

drk> token list

Token ID | Aliases | Mint Authority | Token Blind | Frozen | Freeze Height

----------+---------+-------------------------+----------------+--------+---------------

{TOKEN1} | ANON | {TOKEN1_MINT_AUTHORITY} | {TOKEN1_BLIND} | false | -

{TOKEN2} | DAWN | {TOKEN2_MINT_AUTHORITY} | {TOKEN2_BLIND} | true | 4

Payments

Using the tokens we minted, we can make payments to other addresses.

We will use a dummy recipient, but you can also test this with friends.

Let's try to send some ANON tokens to

DZnsGMCvZU5CEzvpuExnxbvz6SEhE2rn89sMcuHsppFE6TjL4SBTrKkf:

drk> transfer 2.69 ANON DZnsGMCvZU5CEzvpuExnxbvz6SEhE2rn89sMcuHsppFE6TjL4SBTrKkf | broadcast

[mark_tx_spend] Processing transaction: 47b4818caec22470427922f506d72788233001a79113907fd1a93b7756b07395

[mark_tx_spend] Found Money contract in call 0

[mark_tx_spend] Found Money contract in call 1

Broadcasting transaction...

Transaction ID: 47b4818caec22470427922f506d72788233001a79113907fd1a93b7756b07395

On success we'll see a transaction ID. Now again the same confirmation

process has to occur and

DZnsGMCvZU5CEzvpuExnxbvz6SEhE2rn89sMcuHsppFE6TjL4SBTrKkf will receive

the tokens you've sent.

We can see the spent coin in our wallet.

drk> wallet coins

Coin | Spent | Token ID | Aliases | Value | Spend Hook | User Data | Spent TX

-----------------+-------+-----------------+---------+--------------------------+------------+-----------+-----------------

EGV6rS...pmmm6H | true | 241vAN...KcLssb | DRK | 2000000000 (20) | - | - | fbbd7a...5f2b19

...

47QnyR...1T7igm | true | {TOKEN1} | ANON | 4269000000 (42.69) | - | - | 47b481...b07395

5UUJbH...trdQHY | false | {TOKEN1} | ANON | 4000000000 (40) | - | - | -

EEneNB...m6mxTC | false | 241vAN...KcLssb | DRK | 1999442971 (19.97253683) | - | - | -
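The Value column prints each amount twice: the raw integer followed by the display amount in parentheses, for example 4269000000 (42.69). Judging from these samples, the scale is 10^8 raw units per token; that factor is inferred from the output above rather than taken from a specification. A minimal conversion sketch, with the hypothetical name `atomic_to_decimal`:

```shell
# Hypothetical helper: convert a raw coin value to its display amount,
# assuming 8 decimal places (inferred from the sample output above).
atomic_to_decimal() {
    awk -v v="$1" 'BEGIN {
        scale = 100000000
        whole = int(v / scale)       # integer part
        frac = v % scale             # remaining raw units
        s = sprintf("%d.%08d", whole, frac)
        sub(/0+$/, "", s)            # drop trailing zeros
        sub(/\.$/, "", s)            # drop a dangling decimal point
        print s
    }'
}
```

For example, `atomic_to_decimal 4269000000` prints `42.69` and `atomic_to_decimal 2000000000` prints `20`, matching the ANON and DRK rows above.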

We have to wait until the next block to see our change balance reappear in our wallet.

drk> wallet balance

Token ID | Aliases | Balance